NeRF sample based on NVIDIA Instant-ngp

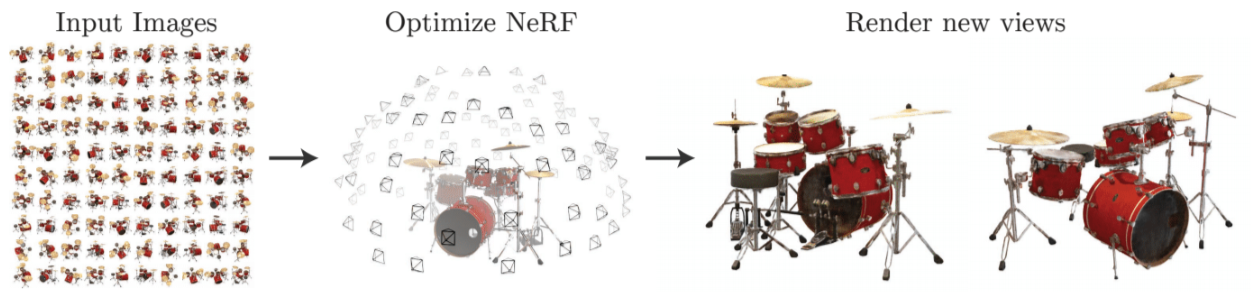

Neural Radiance Fields (NeRF) is a technique from computer graphics and deep learning for synthesizing photorealistic new views of a 3D scene from a set of 2D images. It was introduced by Mildenhall et al. in a paper published in 2020.

The idea behind NeRF is to train a neural network to represent a scene as a continuous function: given a 3D position and a viewing direction, the network predicts the volume density at that point and the color (radiance) emitted from it in that direction. The network learns this mapping from images of the scene, such as photos or frames extracted from a video. Once trained, it can render the scene from viewpoints that were never captured.
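In the original NeRF paper, each input coordinate is passed through a sinusoidal positional encoding before it reaches the network, which helps the model represent fine detail. The sketch below shows only that encoding step, following the formula from the paper (the paper uses 10 frequencies for positions and 4 for directions). It is an illustrative plain-Python sketch, not code from Evergine or Instant-ngp, and `positional_encoding` is a hypothetical helper name; Instant-ngp replaces this step with a learned hash encoding.

```python
import math

def positional_encoding(p, num_freqs):
    """Map each coordinate x of p to sin(2^k * pi * x), cos(2^k * pi * x) for k = 0..num_freqs-1."""
    encoded = []
    for x in p:
        for k in range(num_freqs):
            freq = (2.0 ** k) * math.pi
            encoded.append(math.sin(freq * x))
            encoded.append(math.cos(freq * x))
    return encoded

# A 3D position encoded with 10 frequencies becomes a 60-dimensional vector.
features = positional_encoding([0.1, 0.2, 0.3], num_freqs=10)
print(len(features))
```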

To build a NeRF, you first capture images of the object or scene from many different angles, either as photos or as a video. The camera pose (position and orientation) of each image is then estimated, typically with a structure-from-motion tool such as COLMAP. Finally, the images and their poses are used to train the neural network, which learns an implicit 3D representation of the scene; no explicit 3D mesh is required.

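Once the network has been trained, it can generate new views of the scene: you choose a camera position and orientation, cast a ray through each pixel, query the network at sample points along each ray, and combine the predicted colors and densities into a final pixel color (volume rendering).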
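The step that turns per-point network predictions into a pixel is the volume rendering quadrature from the NeRF paper, C = Σ T_i (1 − exp(−σ_i δ_i)) c_i, where T_i is the transmittance accumulated in front of sample i. The minimal sketch below composites the samples of a single ray, assuming the densities, colors, and sample spacings have already been produced by a trained network; `composite_ray` is a hypothetical helper and not part of the Evergine sample.

```python
import math

def composite_ray(sigmas, colors, deltas):
    """Alpha-composite samples along one ray, ordered front to back.

    sigmas: predicted volume density at each sample
    colors: predicted (r, g, b) color at each sample
    deltas: distance from each sample to the next one along the ray
    """
    transmittance = 1.0  # T_i: fraction of light reaching sample i unoccluded
    pixel = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha
        for c in range(3):
            pixel[c] += weight * color[c]
        transmittance *= 1.0 - alpha  # same as multiplying by exp(-sigma * delta)
    return tuple(pixel)

# Empty space followed by a dense red surface: the ray sees only the red surface.
print(composite_ray([0.0, 1e9], [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0)], [1.0, 1.0]))
```

Because transmittance only decreases along the ray, samples behind an opaque surface contribute almost nothing, which is how occlusion emerges without any explicit geometry.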

Training can be very expensive: the original method needs hours, or even days, to train a model of a single scene. Since the first paper was released, many new studies and projects have explored faster ways to train these models, such as NVIDIA Instant-ngp.

NVIDIA Instant-ngp takes advantage of NVIDIA GPUs, combined with a compact multiresolution hash encoding of the scene, to train a model in seconds or minutes. The trained model can then be saved and loaded directly, so it does not need to be retrained before rendering.
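Much of Instant-ngp's speed comes from its multiresolution hash encoding: the scene is covered by several grids of increasing resolution, and each grid vertex is mapped into a small table of trainable feature vectors with a spatial hash. The sketch below follows the hash function and the geometric resolution schedule described in the Instant-ngp paper (primes 1, 2654435761, and 805459861). The parameter values (16 levels, resolutions from 16 to 2048, a table of 2^19 entries) are illustrative assumptions, and the helper names are hypothetical. The real implementation also indexes coarse levels directly instead of hashing, looks up all 8 corners of each cell, and trilinearly interpolates their features; this sketch only computes one corner's table index per level.

```python
import math

# Per-dimension primes from the Instant-ngp paper (the first one is 1).
PRIMES = (1, 2654435761, 805459861)

def spatial_hash(cell, table_size):
    """Hash an integer 3D grid vertex into the range [0, table_size)."""
    h = 0
    for coord, prime in zip(cell, PRIMES):
        h ^= (coord * prime) & 0xFFFFFFFF  # emulate 32-bit unsigned overflow
    return h % table_size

def level_resolutions(n_min, n_max, num_levels):
    """Grid resolution per level, growing geometrically from n_min to n_max."""
    b = math.exp((math.log(n_max) - math.log(n_min)) / (num_levels - 1))
    return [math.floor(n_min * b ** level) for level in range(num_levels)]

def hash_indices(point, n_min=16, n_max=2048, num_levels=16, table_size=2**19):
    """Table index of the lower corner of the cell containing point (in [0, 1]^3), per level."""
    indices = []
    for res in level_resolutions(n_min, n_max, num_levels):
        cell = tuple(math.floor(x * res) for x in point)
        indices.append(spatial_hash(cell, table_size))
    return indices

print(hash_indices((0.3, 0.6, 0.9)))
```

Since the table size is fixed, fine levels inevitably have hash collisions; the paper relies on the training gradients to resolve them, which keeps memory small and lookups fast on the GPU.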

We have created an Evergine sample that combines NVIDIA Instant-ngp with Evergine and shows how to integrate NeRF scene rendering into an Evergine project.

In this release, we introduce the NeRF sample, which lets you explore this technology with Evergine. In the sample, you can navigate through a NeRF scene, and you can use its code base as a starting point to extend it and explore new possibilities.

The sample shows an industrial heat pump generated with a neural radiance field model. Users can orbit around the model to see how the trained network renders the heat pump from any viewing angle. The rendered images are synthetic: a given camera angle may never have appeared in the training images, yet the model is able to predict what the heat pump looks like from there.
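Orbiting around a model amounts to generating camera poses on a circle that all look at the model's center, then rendering each pose with the trained network. The sketch below builds such a 4×4 camera-to-world matrix. It assumes a right-handed, y-up world and the OpenGL-style camera convention used by the original NeRF datasets (x right, y up, camera looking along its local −z axis); it is not the sample's actual Evergine camera code, and `orbit_pose` is a hypothetical helper.

```python
import math

def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def orbit_pose(angle_rad, radius, height, target=(0.0, 0.0, 0.0)):
    """Camera-to-world matrix for a camera on a horizontal circle, looking at target.

    Assumes world up is +y; radius must be non-zero so the view is never vertical.
    """
    world_up = (0.0, 1.0, 0.0)
    eye = (target[0] + radius * math.sin(angle_rad),
           target[1] + height,
           target[2] + radius * math.cos(angle_rad))
    forward = normalize(tuple(t - e for t, e in zip(target, eye)))
    right = normalize(cross(forward, world_up))
    up = cross(right, forward)          # already unit length
    back = tuple(-f for f in forward)   # camera looks along -z, so +z points backwards
    # Columns: camera x axis, y axis, z axis, and camera position in world space.
    return [
        [right[0], up[0], back[0], eye[0]],
        [right[1], up[1], back[1], eye[1]],
        [right[2], up[2], back[2], eye[2]],
        [0.0, 0.0, 0.0, 1.0],
    ]

# 36 poses, one every 10 degrees, at 2 units from the center and 0.5 units above it.
poses = [orbit_pose(math.radians(deg), 2.0, 0.5) for deg in range(0, 360, 10)]
```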

You can also generate your own NeRF models and add them to the sample. First, record a video or capture a collection of images of the object or environment you want to represent, using your mobile device or a camera. Then train the model with Instant-ngp and export the transforms.json file, which contains the camera pose (position and orientation) of each image used for training, together with the base.ingp file, which stores the trained network. We recommend running more than 35,000 training iterations to get a well-defined model.
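To check which camera poses ended up in a dataset, you can inspect the transforms file (named transforms.json in the Instant-ngp tools). In the format inherited from the original NeRF datasets, it stores the horizontal field of view in radians as `camera_angle_x` and a `frames` list whose entries hold a `file_path` and a 4×4 camera-to-world `transform_matrix`. The sketch below reads only those fields; real files usually contain more (for example `fl_x` or `aabb_scale`), and `summarize_transforms` is a hypothetical helper, not part of Instant-ngp or the Evergine sample.

```python
import json
import math

def summarize_transforms(json_text, image_width):
    """Return the focal length in pixels and (file_path, camera position) for each frame."""
    data = json.loads(json_text)
    # Pinhole model: horizontal field of view (radians) -> focal length in pixels.
    focal_px = 0.5 * image_width / math.tan(0.5 * data["camera_angle_x"])
    cameras = []
    for frame in data["frames"]:
        m = frame["transform_matrix"]           # 4x4 camera-to-world, row-major
        position = (m[0][3], m[1][3], m[2][3])  # translation column = camera center
        cameras.append((frame["file_path"], position))
    return focal_px, cameras

example = json.dumps({
    "camera_angle_x": 2 * math.atan(0.5),
    "frames": [{
        "file_path": "./images/0001.jpg",
        "transform_matrix": [[1, 0, 0, 0.5], [0, 1, 0, 1.0], [0, 0, 1, 2.0], [0, 0, 0, 1]],
    }],
})
focal, cameras = summarize_transforms(example, image_width=800)
print(round(focal, 3), cameras)
```

Plotting the camera positions returned this way is a quick way to spot views that pose estimation placed incorrectly before spending time on training.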

Our recommendations for capturing images or videos for a NeRF model are:

- Ensure good lighting.

- Use a high-resolution (HD or 4K) camera.

- Use a wide-angle lens.

- Use a fast shutter speed (short exposure time) to avoid motion blur.

- Keep the exposure consistent throughout the recording.
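A back-of-the-envelope estimate shows why exposure time matters for sharp frames. Under a pinhole camera model, if the camera moves sideways at speed v past a subject at distance Z during an exposure of t seconds, a point on the subject smears across roughly f · v · t / Z pixels, where f is the focal length in pixels. The numbers below (a 1500 px focal length, walking the camera at 0.5 m/s, a subject 2 m away) are illustrative assumptions, the helper name is hypothetical, and camera rotation, which also causes blur, is ignored.

```python
def motion_blur_px(focal_px, speed_m_s, distance_m, exposure_s):
    """Approximate blur length in pixels for a camera translating parallel to the image plane."""
    # Camera shift during the exposure, projected onto the image at depth distance_m.
    return focal_px * speed_m_s * exposure_s / distance_m

for shutter in (1 / 30, 1 / 125, 1 / 500):
    blur = motion_blur_px(focal_px=1500, speed_m_s=0.5, distance_m=2.0, exposure_s=shutter)
    print(f"1/{round(1 / shutter)} s -> {blur:.2f} px")
```

With these assumptions, a 1/30 s exposure smears details across about 12.5 pixels, while 1/500 s keeps the blur below one pixel, which is the level NeRF training needs to recover fine detail.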

We are continuing to work in this area, and we hope to add more samples that combine NeRF technology with Evergine in the coming months. Stay tuned for more!