Hand Tracking on Quest

In this Evergine release, the OpenXR integration adds Hand Tracking support. Hand Tracking in XR applications lets users interact with virtual objects and environments using their own hands. This offers a new way to interact with your virtual scene without traditional hand controllers.

Meta Quest devices benefit the most from this feature, since Evergine also supports Meta's proprietary hand-tracking extensions on them.

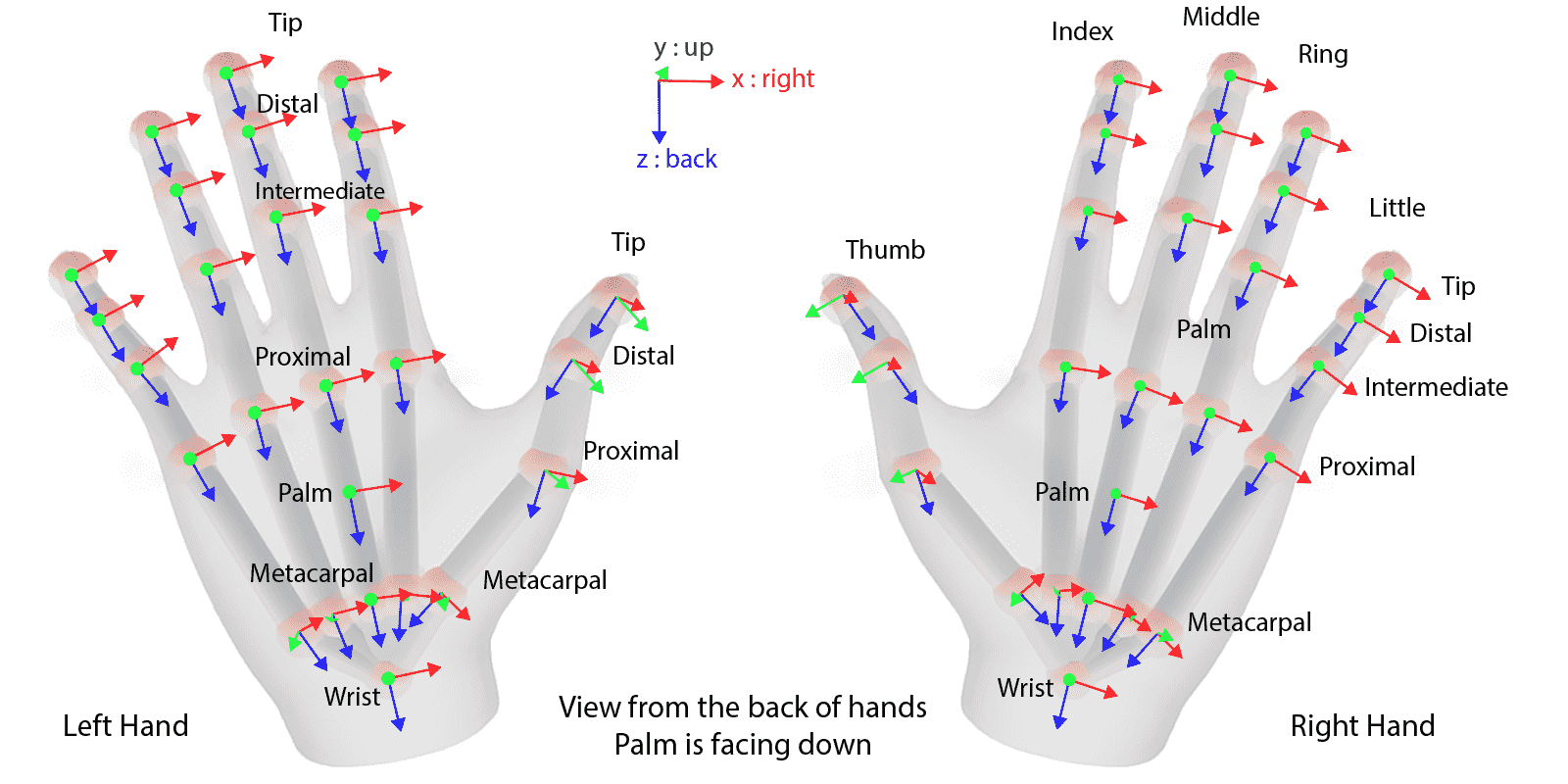

Supported Hand Joints

Evergine provides an updated list of hand joints. It defines 26 joints for hand tracking: 4 for the thumb, 5 for each of the other four fingers, plus the wrist and palm joints (4 + 4 × 5 + 2 = 26):

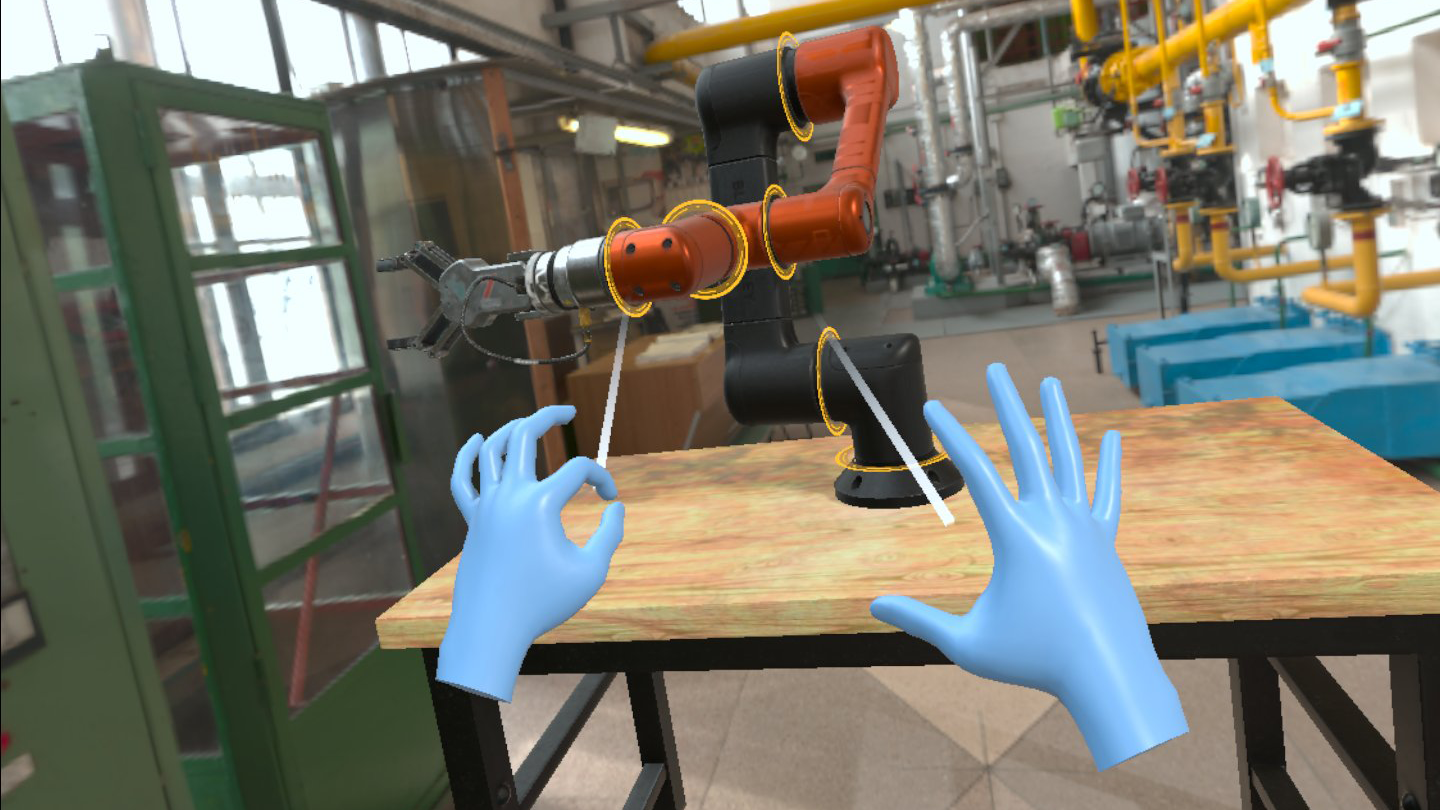
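For reference, the sketch below mirrors the joint layout that the OpenXR XR_EXT_hand_tracking specification defines in its XrHandJointEXT enumeration: palm = 0, wrist = 1, then each finger from metacarpal to tip. It is written in Python for illustration only. The `HandJoint` enum and the `finger_joints` helper are hypothetical, and Evergine's own hand-joint type may use different names or ordering.

```python
from enum import IntEnum
from typing import List


class HandJoint(IntEnum):
    """Hypothetical mirror of the XrHandJointEXT ordering (XR_EXT_hand_tracking).

    Not Evergine's API: names and values follow the OpenXR specification.
    """
    PALM = 0
    WRIST = 1
    # The thumb has no intermediate joint, so it contributes 4 joints.
    THUMB_METACARPAL = 2
    THUMB_PROXIMAL = 3
    THUMB_DISTAL = 4
    THUMB_TIP = 5
    # Every other finger contributes 5 joints.
    INDEX_METACARPAL = 6
    INDEX_PROXIMAL = 7
    INDEX_INTERMEDIATE = 8
    INDEX_DISTAL = 9
    INDEX_TIP = 10
    MIDDLE_METACARPAL = 11
    MIDDLE_PROXIMAL = 12
    MIDDLE_INTERMEDIATE = 13
    MIDDLE_DISTAL = 14
    MIDDLE_TIP = 15
    RING_METACARPAL = 16
    RING_PROXIMAL = 17
    RING_INTERMEDIATE = 18
    RING_DISTAL = 19
    RING_TIP = 20
    LITTLE_METACARPAL = 21
    LITTLE_PROXIMAL = 22
    LITTLE_INTERMEDIATE = 23
    LITTLE_DISTAL = 24
    LITTLE_TIP = 25


# Total joint count; equals XR_HAND_JOINT_COUNT_EXT (26) in the OpenXR headers.
HAND_JOINT_COUNT = len(HandJoint)


def finger_joints(finger: str) -> List[HandJoint]:
    """Return one finger's joints ("thumb", "index", ...) ordered from base to tip."""
    prefix = finger.upper() + "_"
    # Enum iteration follows definition order, which here is also index order.
    return [joint for joint in HandJoint if joint.name.startswith(prefix)]
```

Grouping joints per finger this way means, for example, that a fingertip is always the last element of `finger_joints(...)`.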

Render Hands

The new XRDeviceRenderableModel component makes it possible to obtain a renderable model associated with an XR controller. For articulated hands, it provides a skinned mesh of the hand that follows the user's hand poses.

New Supported OpenXR Extensions

Support for the following OpenXR extensions has been added to provide Hand Tracking:

- XR_EXT_hand_tracking: Cross-vendor extension that provides the hand joint poses.

- XR_FB_hand_tracking_aim: Meta proprietary extension that provides an aim pose and a gesture recognition layer (such as pinch detection).

- XR_FB_hand_tracking_mesh: Meta proprietary extension that provides a skinned model to render the hands on Quest devices.
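In OpenXR, extensions are enabled when the instance is created: the runtime reports what it supports through `xrEnumerateInstanceExtensionProperties`, and the selected names are passed in `XrInstanceCreateInfo::enabledExtensionNames`. Evergine handles this internally; the Python sketch below only illustrates the selection logic, assuming the list of available extension names has already been retrieved. The `select_hand_tracking_extensions` function is hypothetical. Because both Meta extensions build on XR_EXT_hand_tracking in the OpenXR specification, they are only enabled when that extension is available.

```python
from typing import Iterable, List

# Extension name strings as defined by the OpenXR specification.
HAND_TRACKING = "XR_EXT_hand_tracking"            # joint poses (cross-vendor)
HAND_TRACKING_AIM = "XR_FB_hand_tracking_aim"     # aim pose and gestures (Meta)
HAND_TRACKING_MESH = "XR_FB_hand_tracking_mesh"   # skinned hand mesh (Meta)


def select_hand_tracking_extensions(available: Iterable[str]) -> List[str]:
    """Hypothetical helper: choose which hand-tracking extensions to enable.

    `available` is assumed to hold the names reported by the runtime
    (e.g. collected from xrEnumerateInstanceExtensionProperties).
    """
    names = set(available)
    # Without the base extension there is no hand tracking at all, and the
    # Meta extensions cannot be used because they depend on it.
    if HAND_TRACKING not in names:
        return []
    enabled = [HAND_TRACKING]
    # Optional Meta extensions: enable each one only if the runtime offers it.
    for optional in (HAND_TRACKING_AIM, HAND_TRACKING_MESH):
        if optional in names:
            enabled.append(optional)
    return enabled
```

On a runtime that exposes only XR_EXT_hand_tracking, this yields joint poses without gestures or a hand mesh; on Quest, all three names would be selected.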